Load Testing Your Storage Subsystem with Diskspd

One of the primary activities I do before bringing SQL Server into production is load testing the storage subsystem. On a new system this is critical because I want to ensure that we’re “getting what we’ve paid for” when it comes to the disk subsystem. All too often there’s a configuration issue, a component mismatch, a fundamental misunderstanding of the technology or, worse, an insufficient disk subsystem…any of these can lead to poor disk performance. Even if it’s the simplest test, it’s imperative to measure performance, as it’s significantly harder to make changes to a SQL Server once a database is in production. So do your testing. This is especially important if your disks are not direct attached, or if they live in a shared storage environment such as a SAN or VMware datastore. Storage networks, controllers, shelves…it gets complicated fast!

In this article we’re going to discuss what we’re looking for when load testing your storage subsystem and introduce Diskspd, a tool for performance testing disks using varying IO patterns and sizes.

Key measurements

The two metrics of key interest to us in a disk subsystem are bandwidth and access latency. Bandwidth, often referred to as data transfer rate, is how much data can be moved in a time interval; think gigabytes per second. Access latency, or access time, is how long a disk transaction takes from request to the delivery of the requested data. Latency is measured in milliseconds for hard disk drives and microseconds for solid state drives. I don’t get hung up on IOPs as much, as they’re really a function of latency. Keep your latency low and your IOPs will likely be high.
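To make the “IOPs are a function of latency” point concrete, here is a minimal Python sketch. It assumes a deliberately simplified model in which a fixed number of IOs are kept in flight and every IO takes the same average latency. The function names and the sample latencies are illustrative assumptions, not measurements from any real system.

```python
def estimate_iops(avg_latency_seconds: float, outstanding_ios: int = 1) -> float:
    """Estimate IOs per second from average latency.

    Simplified model (Little's Law): throughput equals the number of IOs
    kept in flight divided by the time each IO takes to complete.
    """
    return outstanding_ios / avg_latency_seconds


def estimate_bandwidth_mb_per_sec(iops: float, io_size_bytes: int) -> float:
    """Bandwidth is simply IOs per second multiplied by the size of each IO."""
    return iops * io_size_bytes / (1024 * 1024)


if __name__ == "__main__":
    io_size = 8 * 1024  # an 8KB IO
    # Hypothetical average latencies, chosen only to contrast the two media.
    samples = (("HDD-like, 5 ms", 0.005), ("SSD-like, 100 us", 0.0001))
    for label, latency in samples:
        iops = estimate_iops(latency, outstanding_ios=1)
        mb_per_sec = estimate_bandwidth_mb_per_sec(iops, io_size)
        print(f"{label}: ~{iops:,.0f} IOPs, ~{mb_per_sec:,.1f} MB/s")
```

In this model, halving the latency doubles the IOPs, which is why latency is the number I watch first.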

Access Patterns

The access pattern in which data is read from a drive can have significant implications for the bandwidth and latency of the request. There are two access patterns that we’re concerned with when load testing a system, sequential and random, each with its own performance characteristics.

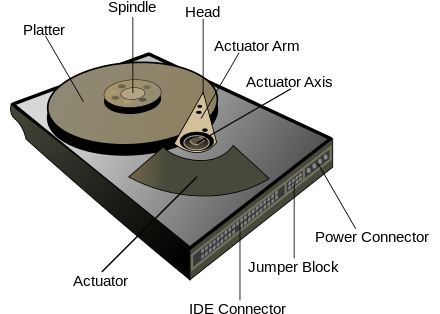

Sequential access is when an application requests a block of data and the next block requested is physically adjacent on the disk. On a hard disk drive (HDD) the drive’s head does not have to move and the disk platter simply rotates under the head to read the next block off the disk. On a solid state disk (SSD) this becomes less of a concern, as an SSD’s access latency is constant across the drive. In SQL Server, sequential IO is analogous to table/index scans, transaction log writes and database backups. When load testing a system we use sequential IO patterns to saturate the disk subsystem’s IO path and determine if there are any physical bottlenecks limiting bandwidth between the application driving the IO and the device serving up the data…the disk drive, the storage network (interconnect) or the SAN.

Figure 1: Hard Disk Drive – Image from Wikipedia

Random access is when an application requests a block of data and the next block requested may not be physically adjacent on the disk. On an HDD the drive’s head may have to move and the disk’s platter rotate to read the next block off the disk. This all contributes to our access latency. Drive vendors refer to this as seek time in drive specifications. A solid state disk is composed of an array of flash memory chips, each of which has a fixed access latency. So on an SSD, if an application requests a block, the request for the next block can be serviced in a fixed amount of time, regardless of its location on the disk (there is a small amount of latency when switching between flash packages). This means random IO patterns can be serviced more efficiently, as we do not have to wait for a physically moving component to access the next block…and that’s the game changer for SSDs when compared with HDDs.

Further, the access latency of an IO on an SSD is measured in microseconds, which is orders of magnitude faster than an HDD, where it is measured in milliseconds. In SQL Server, random IO patterns can occur on index seeks, data file writes and operations that read from the transaction log. When load testing a system we use random IO patterns to find the overall access latency to the disk subsystem and determine if there are any components in the system that are not servicing the requests “fast enough” or, in other words, contributing to access latency.

Variable IO Sizes

An application can request data in variable IO sizes. For example, SQL Server can perform IOs in 8KB, 64KB, 128KB, 256KB and more. The size of the IO impacts both latency and bandwidth. A small IO can have a lower access latency, as latency is measured from the request of the IO until the delivery of all of the data requested. Small IOs can potentially consume less bandwidth as well, as each IO translates into physical disk accesses, each of which carries some access latency. So of key importance when measuring smaller IO sizes is a low access latency. If the disk subsystem can service the IO quickly enough, then we can see higher bandwidths on smaller operations. But we’re really shooting for getting the IO completed as quickly as possible.

A larger IO request can have a higher access latency, since the measurement is from the beginning of the request until it is finished. Simply put, a larger IO will take longer to transfer because the IO is moving more data in one operation. The IO still has to pay the cost of the initial access latency, but usually the dominant factor in the duration of the IO is the transfer. So of key importance when measuring larger IO sizes is higher bandwidth, but still keep an eye on latency.
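A rough way to picture the trade-off between small and large IOs is to treat the duration of an IO as a fixed access cost plus a transfer cost that grows with its size. The Python sketch below does exactly that. The model is an assumption for illustration only, and the 100 microsecond access latency and 500 MB/s transfer rate are made-up numbers, not the specifications of any device.

```python
def io_duration_seconds(io_size_bytes: int,
                        access_latency_seconds: float,
                        transfer_bytes_per_second: float) -> float:
    """Simplified model: fixed access latency plus time to move the data."""
    transfer_time = io_size_bytes / transfer_bytes_per_second
    return access_latency_seconds + transfer_time


def transfer_share(io_size_bytes: int,
                   access_latency_seconds: float,
                   transfer_bytes_per_second: float) -> float:
    """Fraction of the IO's total duration that is spent transferring data."""
    total = io_duration_seconds(io_size_bytes, access_latency_seconds,
                                transfer_bytes_per_second)
    return (io_size_bytes / transfer_bytes_per_second) / total


if __name__ == "__main__":
    latency = 0.0001             # hypothetical 100 microsecond access latency
    rate = 500 * 1024 * 1024     # hypothetical 500 MB/s transfer rate
    for size_kb in (8, 64, 256, 1024):
        size = size_kb * 1024
        total_us = io_duration_seconds(size, latency, rate) * 1_000_000
        share = transfer_share(size, latency, rate) * 100
        print(f"{size_kb:>5}KB: {total_us:8.1f} us total, {share:5.1f}% spent in transfer")
```

Under these assumptions the access latency dominates the small IOs and the transfer dominates the large ones, which is why we watch latency for small IO sizes and bandwidth for large ones.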

Diskspd

Enter Diskspd, a tool from Microsoft which allows us to performance test a disk subsystem. Diskspd allows us to define specific IO patterns, IO sizes, file sizes, read or write access, number of threads, access stride, and many more options. One key feature of Diskspd is that it reports access latency in microseconds, which is becoming more important as SSDs become more common in the enterprise. SQLIO has served us well for years, but Diskspd is really the next generation of testing.
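As a small preview of how those options map onto a command line, here is a hedged Python sketch that only assembles a Diskspd argument list; it does not run anything. The switches used (-b block size, -d duration in seconds, -t threads, -o outstanding IOs per thread, -r random access, -w write percentage, -L latency statistics, -Sh disable caching, -c create a test file of a given size) are the ones I understand from the Diskspd documentation, so check them against the version you download. The helper name, the default values and the T:\ path are assumptions invented for this example.

```python
def build_diskspd_command(target_file: str,
                          block_size: str = "8K",
                          duration_seconds: int = 60,
                          threads: int = 4,
                          outstanding_ios: int = 8,
                          random_io: bool = True,
                          write_percent: int = 0,
                          file_size: str = "10G") -> list[str]:
    """Assemble (but do not execute) a Diskspd command line as a list of arguments."""
    command = [
        "diskspd.exe",
        f"-b{block_size}",        # size of each IO
        f"-d{duration_seconds}",  # how long to run the test, in seconds
        f"-t{threads}",           # worker threads per target file
        f"-o{outstanding_ios}",   # outstanding IOs per thread
        f"-w{write_percent}",     # percentage of IOs that are writes (0 = read only)
        "-L",                     # capture latency statistics
        "-Sh",                    # disable software and hardware caching
        f"-c{file_size}",         # create a test file of this size
    ]
    if random_io:
        command.append("-r")      # random access; omit for sequential access
    command.append(target_file)
    return command


if __name__ == "__main__":
    # Hypothetical test file path; point it at the volume you want to test.
    print(" ".join(build_diskspd_command(r"T:\testfile.dat")))
    print(" ".join(build_diskspd_command(r"T:\testfile.dat", block_size="256K",
                                         random_io=False)))
```

The first line printed is a small-block random read test, the kind we use to look at latency. The second is a large-block sequential read test, the kind we use to look at bandwidth.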

In our next post we’ll discuss how to performance test a disk subsystem using Diskspd and look at some of the key values of bandwidth, latency, and likely IOPs too.