SQL Server on Linux – External Memory Pressure with 2019 CTP2

The Scenario

Our SQL Server on Linux system is a CentOS 7.5 VM, running SQL Server 2019 (CTP2). There is 12GB of physical memory and 80% of that is dedicated to SQL Server via mssql-conf about 9.2GB, the default configuration. I’ve also set Max Server memory to 8GB for the SQL Server instance.

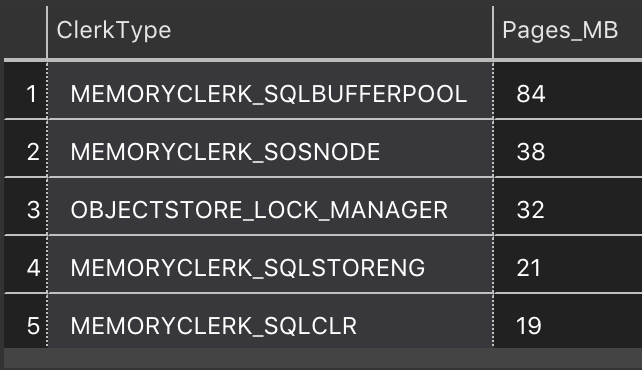

So let’s start up SQL Server on Linux 2019 CTP2 and query the SQL Server’s cache sizes when there’s no data loaded. I’m going to look in sys.dm_os_memory_clerks for memory allocation accounting information. In Figures 1 we can get a feel for the memory distribution across the system’s caches. Special shoutout to the new “Azure Data Studio” I’m using in these demos.

Getting Memory Information From Linux

We can use tools like ps, top and htop to look our are virtual and physical memory allocations. We can also look in the /proc virtual file system for our process and look at the status file. In here we’ll find the point in time status of a process, and most importantly the types of memory allocations for a process. We’ll get granular data on the virtual memory allocations and also the resident set size of the process. Here are the interesting values in the status file we’re going to focus on today.

- VmSize – total current virtual address space of the process

- VmRSS – total amount of physical memory currently allocated to the process

- VmSwap – total amount of virtual memory currently paged out to the swap file (disk)

We can use the command pidof sqlservr to find the process ID for our SQL on Linux process, in our case it’s 14689. When running SQL Server on Linux there is always two processes for sqlservr, we’re going to look the one with the higher process ID as the one with the lower PID is the watchdog process.

Now to access the status information for our process we look in /proc/14689/status

Below is the output from our process’ status file, filtering for the values we’re interested in. You can see about 16.4GB of Virtual Memory (VmSize), 7.9GB of which is in actual RAM (VmRSS) and 0MB in the swap file (VmSwap). Wait a second, 16GB of for VmSize? Yea, 16GB for VmSize. That’s the reserved allocation for the whole process. More on that in a second…

VmSize: 17165472 kB

VmRSS: 8579016 kB

VmSwap: 0 kB

VmSize: 10661380 kB

VmRSS: 8201240 kB

VmSwap: 0 kB

Phantom Memory Allocation?

Let’s Add Some Pressure

Using a small C program I wrote, I use calloc to allocate large sections of contiguous memory and then I have the program continually write data into the memory buffer to ensure those pages stay in physical memory. Using this program, let’s allocate 7GB of RAM. I’m choosing this value because its going to cause my system to exceed it’s physical memory but NOT exceed the amount virtual memory in my system when including the swap file’s size which is 5.8GB on my system. Things can get really dicey if you exceed physical and virtual memory size, processes will start to get killed. Microsoft documents a case here. Just so you know how important adding a monitor for external memory pressure is, when I allocated 7.5GB and exceeded physical + virtual memory Linux killed both the memory allocation program and SQL Server to protect the stability of the system, oomkiller is a thing to look out for!

Now, let’s look at the memory accounting information at /proc/PID/status for my memory allocator program. In the output below you can see we have just about 7GB of virtual memory (VmSize) allocated and nearly the same amount of memory that’s resident in physical memory (VmRSS) and no data in the swap file.

VmSize: 7344248 kB

VmRSS: 7340312 kB

VmSwap: 0 kB

Table 3: Process memory for external memory allocation program

Now in SQL Server 2017 with that 7GB program running would cause Linux to need to make room in physical memory for this process. Linux does this by swapping least recently used pages from memory out to disk. So under external memory pressure, let’s look at the SQL Server process’ memory allocations according to Linux. In the output below we see we still have a VmSize of around 10GB, but our VmRSS value has decreased dramatically. In fact, our VmRSS is now only 2.95GB. VmSwap has increased to 5.44GB. Wow, that’s a huge portion of the SQL Server process swapped to disk.

VmSize: 10700328 kB

VmRSS: 3098456 kB

RssAnon: 3096308 kB

VmData: 10442840 kB

VmSwap: 5711856 kB

Table 4 – Process memory for SQL Server 2017 on Linux under external memory pressure

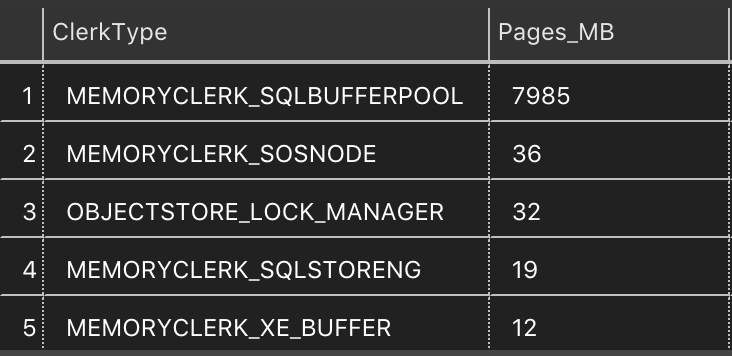

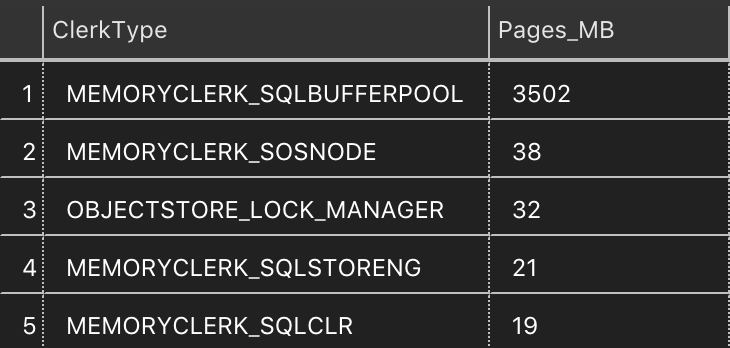

In SQL Server 2019, there’s a different outcome! In the data below we see our 16GB VmSize which won’t change much because of the virtual address space for the process. With that large external process running SQL Server reduced VmRSS from 7.9GB (from Table 1) to 2.8GB only placing about 4.68GB in the swap file. That doesn’t sound much better, does it? I thought SQL Server was going to react to the external memory pressure…let’s keep digging and ask SQL Server what it thinks about this.

VmSize: 17159552 kB

VmRSS: 2938684 kB

VmData: 16806460 kB

VmSwap: 4911120 kB

What’s SQL Server Think About This?

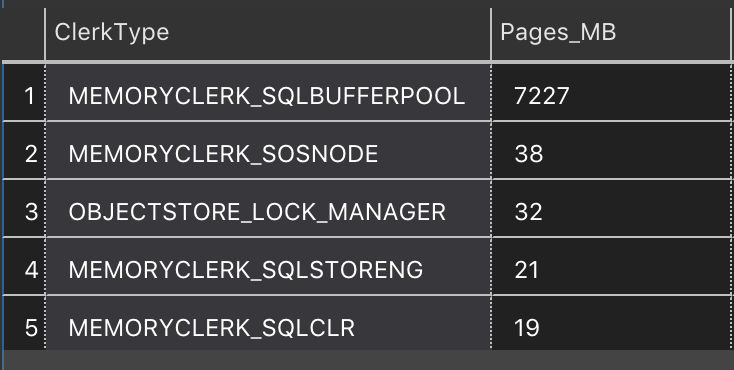

In SQL Server on Linux 2017, we saw a large chunk of the process address space swap out and we saw those pages stay resident in SQL Server’s buffer pool or really any part of the SQL Server process address space since the Linux kernel is an equal opportunity swapper…so anything in the caches managed by SQL Server didn’t know if that memory was resident in physical memory or was actually on disk. In figure 3, we see the top memory consumers after external memory pressure, our buffer pool is still fully populated with our table data.

Resource Monitor on Linux – How I think it works…

madvise(0x69274a000, 8192, MADV_DONTNEED) = 0

strace -p PID -f -c

% time seconds usecs/call calls errors syscall

—— ———– ———– ——— ——— —————-

75.98 575.483472 12188 47217 11425 futex

8.24 62.370591 275976 226 epoll_wait

7.73 58.578205 2253008 26 14 restart_syscall

3.44 26.050226 2170852 12 io_getevents

1.60 12.096475 549840 22 nanosleep

1.55 11.726022 266501 44 44 rt_sigtimedwait

0.71 5.387356 14 389517 clock_gettime

0.49 3.688034 24 154405 madvise

0.12 0.944458 803 1176 munmap

0.07 0.495154 343 1445 mmap

0.06 0.444399 7796 57 open

0.01 0.062301 1093 57 read

0.00 0.013327 579 23 23 stat

0.00 0.009003 46 196 gettid

0.00 0.005058 43 119 sched_setaffinity

0.00 0.004188 68 62 clone

0.00 0.004018 65 62 set_robust_list

0.00 0.003902 36 109 rt_sigprocmask

0.00 0.003194 56 57 fstat

0.00 0.002914 47 62 sched_getaffinity

0.00 0.002331 38 62 sigaltstack

0.00 0.001805 29 62 arch_prctl

0.00 0.001575 28 57 close

0.00 0.001182 394 3 io_submit

0.00 0.000672 42 16 sched_yield

0.00 0.000506 22 23 rt_sigaction

0.00 0.000011 11 1 fdatasync

—— ———– ———– ——— ——— —————-

100.00 757.380379 595118 11506 total

% time seconds usecs/call calls errors syscall

—— ———– ———– ——— ——— —————-

71.52 569.206916 33673 16904 4444 futex

12.46 99.164048 819538 121 epoll_wait

6.90 54.920907 2387866 23 17 restart_syscall

2.73 21.715336 1084 20033 io_getevents

2.15 17.123567 1902619 9 nanosleep

2.07 16.494124 868112 19 19 rt_sigtimedwait

1.77 14.122895 1070 13202 io_submit

0.22 1.780524 218 8180 munmap

0.10 0.769185 93 8262 mmap

0.06 0.502749 125 4026 clock_gettime

0.00 0.006171 386 16 sched_setaffinity

0.00 0.005702 62 92 read

0.00 0.004128 197 21 fstat

0.00 0.003996 118 34 sched_yield

0.00 0.003961 165 24 gettid

0.00 0.003112 346 9 arch_prctl

0.00 0.002615 291 9 sched_getaffinity

0.00 0.002528 84 30 rt_sigprocmask

0.00 0.002045 85 24 close

0.00 0.001929 92 21 open

0.00 0.001924 214 9 clone

0.00 0.001752 195 9 set_robust_list

0.00 0.001647 97 17 madvise

0.00 0.001198 599 2 fdatasync

0.00 0.000989 110 9 sigaltstack

0.00 0.000632 57 11 11 stat

0.00 0.000446 45 10 rt_sigaction

0.00 0.000351 18 19 write

0.00 0.000316 11 29 epoll_ctl

0.00 0.000310 44 7 writev

0.00 0.000159 11 15 7 readv

0.00 0.000051 26 2 socket

0.00 0.000021 11 2 setsockopt

0.00 0.000014 4 4 getcpu

0.00 0.000009 3 3 getsockname

0.00 0.000009 5 2 getpeername

0.00 0.000004 4 1 accept

0.00 0.000004 2 2 fcntl

—— ———– ———– ——— ——— —————-

100.00 795.846274 71212 4498 total